Today we will look at a common C++ interview problem: Least Frequently Used cache.

Implement an LFU cache data structure with a limited capacity that provides two O(1) (average) methods: int get(int key) and void put(int key, int value). When the cache runs out of capacity, the put method should drop the least frequently used key (both get and put calls count as use), if there is a tie, the least recently used key should be dropped.

Before you continue reading the solution, I encourage you to try to solve it yourself. Here is a Compiler Explorer link with a couple of test cases: https://compiler-explorer.com/z/T91ezqoh5.

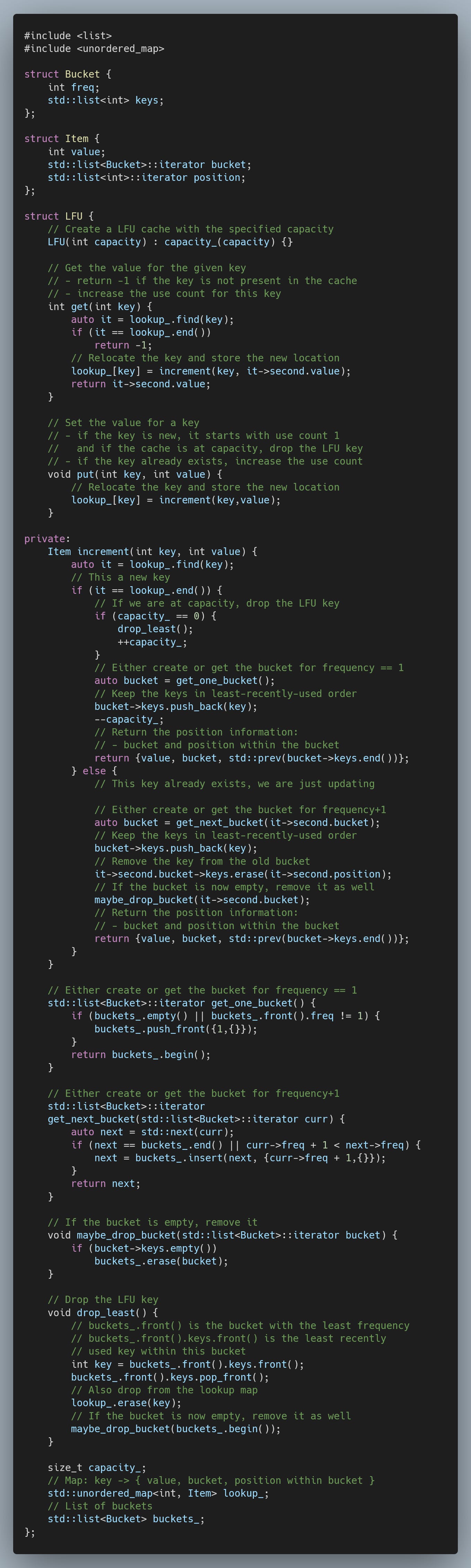

Solution

When designing a data structure, the first thing to consider is what operations we must provide at the requested big-O complexity. This will typically dictate what components we need to use in our design.

For our LFU cache, we need to be able to drop the least frequently used key (in O(1)), meaning that we must keep the keys in sorted order. On top of that, since many keys can have the same frequency, we must keep the keys with the same frequency in the least recently used order.

When we access a key (either through get or put), we also need to be able to relocate it within our sorted data structure in O(1). Potentially this relocation may need to skip over many other keys.

These features heavily limit what possibilities we have. We must use a std::list for the fast relocation of elements, and we can combine that with a std::unordered_map to look up information based on a key.

However, we need one more trick. We must bucket the data by frequency to relocate keys across many other keys. Moving a key to a higher frequency, we can then relocate it to the bucket with frequency+1, making sure to keep around only buckets that are not empty (limiting the number of buckets to the LFU cache capacity).